AI Act Series #4 AI Regulatory Sandbox

16 September 2024

How does the AI Act enable real-world testing of innovative AI systems?

The European Artificial Intelligence Regulation (“AI Act”) establishes the first comprehensive EU-wide framework for regulatory sandboxes as a measure to foster innovation, accelerate market access for AI systems, improve legal certainty and contribute to ‘evidence-based regulatory learning’.

Definition and Benefits

What are regulatory sandboxes?

Regulatory sandboxes allow new technologies that do not yet exist on the market, and whose risk classification may still be uncertain, to be tested and refined on a voluntary basis in a controlled real-world environment under strict regulatory oversight. Such a framework aims to guide and support innovators in implementing compliance by design, thereby minimizing the risk of products or services being abandoned at an early stage due to the associated risks.

Key benefits of AI regulatory sandboxes

The decisive expected benefit is improved access to capital and accelerated market access, both resulting from the greater legal certainty that AI regulatory sandboxes provide. To this end, the AI Act allows the competent authorities to provide legal guidance and to refrain from taking enforcement action. Although no derogations or exemptions from the applicable legislation will be granted, the competent authorities will have a ‘certain flexibility in applying the rules within the limit of the law and within their discretionary powers when implementing the legal requirement to the concrete AI project in the sandbox’¹.

Start-ups will have priority access to AI regulatory sandboxes, under preferential conditions.

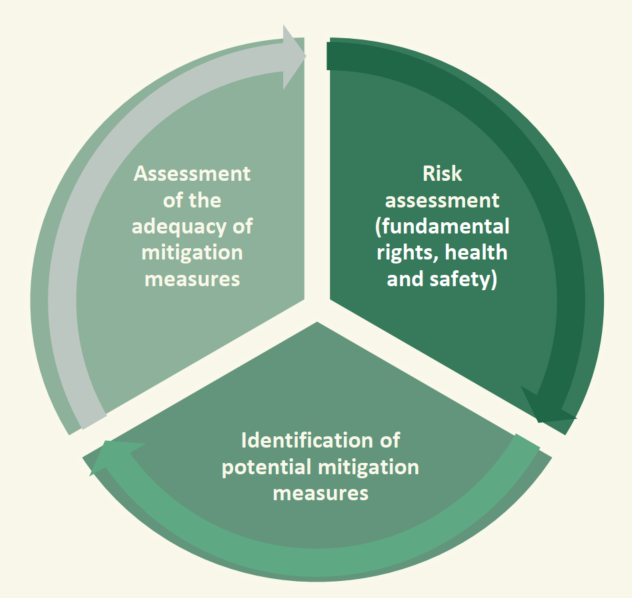

A documented testing process to speed up conformity assessment

As a controlled environment set up for a limited time before AI systems are placed on the market or put into service, an AI regulatory sandbox is framed by a ‘specific plan’ mutually agreed by the provider and the competent authority. Where the sandbox includes supervised testing in real-world conditions, specific terms and conditions must be set out, with particular emphasis on the protection of fundamental rights, health and safety.

The sandbox can give rise to confidential documentation that providers may use to demonstrate their compliance with the AI Act. This includes a ‘written proof’ of the activities successfully carried out and an ‘exit report’ detailing the activities carried out in the sandbox and the related results and learning outcomes. This documentation must be taken positively into account by market surveillance authorities with a view to accelerating conformity assessment procedures.

Liability and limitation of administrative fines

Providers participating in a sandbox remain liable under applicable law for any damage inflicted on third parties as a result of the experimentation. However, if the participant observes the specific plan and the terms and conditions of the sandbox and follows in good faith the guidance of the competent authority, no administrative fines may be imposed for potential infringements of the AI Act. Likewise, where other competent authorities responsible for other legislation (e.g. the data protection authority) are actively involved in the supervision of the AI system and provide guidance for compliance, no administrative fines may be imposed under that legislation either.

Further processing of personal data

The AI Act provides a legal basis for using personal data collected for other purposes to develop, in the public interest, certain AI systems in a limited list of areas including public safety, public health, environmental protection and energy sustainability. However, this derogation from the GDPR principle of purpose limitation is subject to a significant number of restrictive conditions. These concern, for example, the necessity of the data, the implementation of an effective monitoring mechanism to identify high risks to the rights and freedoms of data subjects, and a response mechanism to promptly mitigate those risks.

Testing outside regulatory sandboxes

The AI Act provides for a specific regime enabling the testing of high-risk AI systems in real-world conditions without participating in an AI regulatory sandbox. This is subject to robust safeguards including, inter alia, the informed consent of the natural persons participating in the test, which is required in addition to the consent of data subjects pursuant to the GDPR. To enable oversight by competent authorities, specific requirements will have to be satisfied, including the obligations to set out a real-world testing plan, to register the testing in a dedicated EU database, and to ensure that the decisions of the AI system can be effectively reversed.

Next steps

The European Commission will adopt implementing acts specifying the detailed arrangements for the establishment, development, implementation, operation and supervision of AI regulatory sandboxes. In particular, these forthcoming rules will clarify the eligibility criteria, the procedures for application for, selection of, participation in and exit from regulatory sandboxes, and the participants’ rights and obligations to be reflected in the applicable terms and conditions.

[1] European Commission, ‘Impact Assessment of the Regulation of Artificial Intelligence’, SWD (2021) 94 final, p. 60